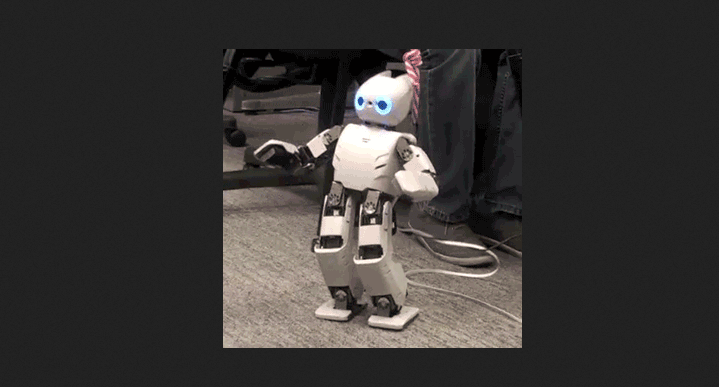

Instead of being programmed, a robot uses brain-inspired algorithms to “imagine” doing tasks before trying them in the real world.

Darwin’s motions are controlled by several simulated neural networks, algorithms that mimic the way learning happens in a biological brain, where the connections between neurons strengthen and weaken over time in response to input. The approach relies on deep-learning networks: very complex neural networks with many layers of simulated neurons.

For the robot to learn how to stand and twist its body, for example, it first runs a series of simulations to train a high-level deep-learning network to perform the task, something the researchers compare to an “imaginary process.” This provides overall guidance for the robot, while a second deep-learning network is trained to carry out the task while responding to the dynamics of the robot’s joints and the complexity of the real environment. The second network is required because when the first network tries, for example, to move a leg, the friction at the point of contact with the ground may throw it off completely, causing the robot to fall.
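To make the division of labor concrete, here is a minimal Python sketch of the two-level idea. It is an illustration under stated assumptions, not the researchers' code: neither stage here is a neural network, and the function names, the single-joint model, the friction value, and the feedback gain are all hypothetical. A high-level stage "imagines" the motion in an idealized, friction-free model, and a low-level stage tracks that plan on a joint whose friction swallows part of every commanded move.

```python
def imagine_plan(start, goal, steps):
    """High-level stand-in (hypothetical): 'imagine' the motion in an
    idealized, friction-free model and return the joint angle expected
    after each step."""
    return [start + (goal - start) * (i + 1) / steps for i in range(steps)]


def real_joint_step(angle, command, friction=0.4):
    """Hypothetical 'real' joint: friction swallows a fixed fraction of
    every commanded move (0.4 is an assumed value for illustration)."""
    return angle + (1.0 - friction) * command


def run_open_loop(start, plan):
    """Replay the imagined plan blindly, with no feedback from the joint."""
    angle, previous = start, start
    for target in plan:
        angle = real_joint_step(angle, target - previous)
        previous = target
    return angle


def run_with_low_level(start, plan, gain=1.5):
    """Low-level stand-in (hypothetical): at each step, command a move
    proportional to the gap between the measured angle and the imagined
    target, so friction losses are corrected as they appear."""
    angle = start
    for target in plan:
        angle = real_joint_step(angle, gain * (target - angle))
    return angle


if __name__ == "__main__":
    plan = imagine_plan(start=0.0, goal=1.0, steps=20)
    print("goal angle:          1.0")
    print("plan replayed as-is:", round(run_open_loop(0.0, plan), 3))
    print("with low-level loop:", round(run_with_low_level(0.0, plan), 3))
```

In this toy setup, replaying the imagined plan on its own leaves the joint well short of the goal, while the feedback stage ends close to it. That mirrors, in a very simplified way, why a second network that responds to real joint dynamics is needed alongside the one trained in simulation.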

The new technique could prove useful for robots working in all sorts of real environments, and especially for achieving more graceful legged locomotion. The current approach is to design an algorithm that takes into account the dynamics of a process such as walking or running.